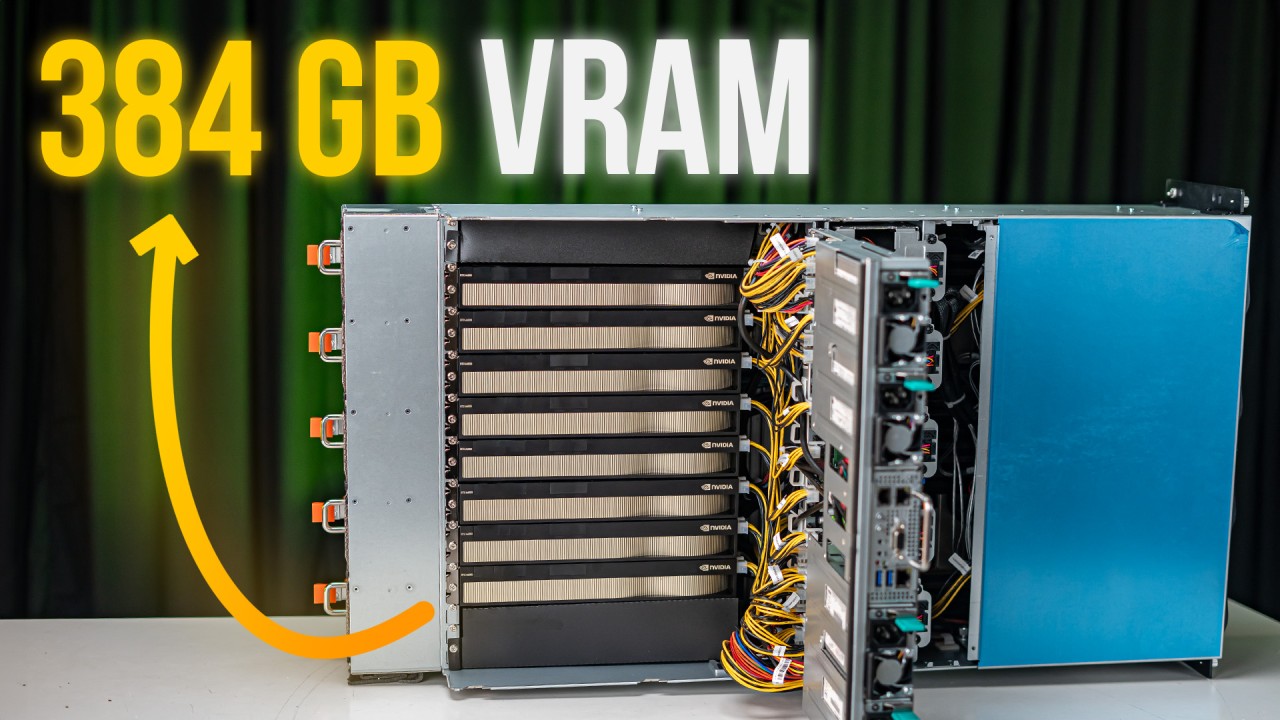

This is a GPU server with 8x RTX A6000s: that's 384 GB of VRAM in a single server purpose-built for deep learning. We'll compare it with traditional cloud services, walk through the hardware selection, and cover everything you need to know to build your own deep learning server.

Before we get to the hardware behind this monster, let's talk about why you would need something like this.

The number one reason is cost.

If you've been using AWS or Microsoft Azure for a long period of time, you know how quickly cloud costs add up into a really large monthly bill. For sustained workloads, the main way out of that is to build your own local server.

Buying your own hardware means a one-time payment for whatever compute you require, which brings your monthly operating cost down to something really low.
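To make the cost argument concrete, here is a minimal break-even sketch. The function name and every figure in it are placeholders chosen for illustration, not real quotes or prices; plug in your own cloud bill, hardware quote, and running costs.

```python
def break_even_months(hardware_cost, monthly_cloud_cost, monthly_local_cost):
    """Months until a one-time hardware purchase beats a recurring cloud bill.

    Returns None if running locally is not cheaper per month than the cloud,
    in which case the purchase never pays for itself.
    """
    monthly_saving = monthly_cloud_cost - monthly_local_cost
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving


# Placeholder figures for illustration only, not real quotes or prices.
months = break_even_months(
    hardware_cost=60_000,      # one-time server purchase
    monthly_cloud_cost=5_500,  # current monthly cloud bill
    monthly_local_cost=500,    # power, cooling, hosting, maintenance
)
print(f"Break-even after {months:.1f} months")
```

The longer you expect to keep the workload running past the break-even point, the stronger the case for owning the hardware.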

The second reason is data security. With the cloud, you have little visibility into how your data is being used. A local server gives you peace of mind on security, because no one has access to it except you.

But how do you know what hardware you need? You need to answer two questions: how much compute do you need, and how much memory do you need? Let's start with compute, which mainly depends on GPUs.

If you're just starting out, the best option is to use the free trials and just get things started.

But if you're constantly trying out new models and mainly deal with hyperparameter search, then your main metric is training throughput. Getting the GPUs with the highest training throughput in your budget is the best way to begin your hardware list. Some good options are the latest Ada series from Nvidia. This particular build has 8 Nvidia RTX A6000s (the previous, Ampere generation) with 48 GB each, for a total of 384 GB of VRAM.
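For the memory question, a rough back-of-the-envelope check can help. The sketch below assumes a common rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state), and it ignores activations, which depend on batch size and architecture. The function names and the 7-billion-parameter model are hypothetical, and fitting into the combined VRAM also assumes the training setup shards model state across all GPUs.

```python
GB = 1e9  # decimal gigabytes, to keep the arithmetic simple


def training_memory_gb(num_params, bytes_per_param=16):
    """Rough memory for weights, gradients and optimizer state, in GB.

    bytes_per_param=16 is an assumed rule of thumb for mixed-precision
    training with Adam. Activation memory is NOT included.
    """
    return num_params * bytes_per_param / GB


def total_vram_gb(num_gpus, vram_per_gpu_gb):
    """Combined VRAM across identical GPUs, in GB."""
    return num_gpus * vram_per_gpu_gb


build_vram = total_vram_gb(num_gpus=8, vram_per_gpu_gb=48)  # this build
needed = training_memory_gb(num_params=7e9)  # hypothetical 7B-parameter model

print(f"Build VRAM: {build_vram} GB, estimated model state: {needed:.0f} GB")
print("Leaves headroom for activations" if needed < build_vram
      else "Does not fit")
```

Treat the result as a lower bound: if the estimate is already close to your total VRAM, the real training run will likely not fit.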

Once you've finalized which GPU you want and how many of them, you need to choose a barebone, or platform.

Intel Xeon and AMD EPYC are the two main server platforms, with Threadripper also used as the entry-level option.

After finalising the platform, there are other factors to consider. How much storage do you need? Do you need a single CPU or dual CPUs? Do you want a tower cabinet or a rack-mounted server? What network throughput do you need? And do you need ECC memory?

Based on all these factors – our configuration team can help you get the exact hardware for your requirements.

Similarly, if you're not training but looking for inference, you need the GPU that runs inference fastest for your particular model. Nvidia's H100, A100 & L40s are great GPUs for inference. So choose the GPU that fits your use case best, and build your list from there.
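Since "fastest for your particular model" is something you can only find out by measuring, here is a minimal timing sketch you could run on each candidate GPU. The names `measure_latency_ms` and `fake_model` are hypothetical; `fake_model` is a CPU stand-in so the example runs anywhere, and you would replace it with your own model's forward pass. With a GPU framework, make sure the callable blocks until the result is actually ready, otherwise you will only time the kernel launch.

```python
import statistics
import time


def measure_latency_ms(run_inference, warmup=3, iterations=20):
    """Median wall-clock latency of one inference call, in milliseconds."""
    # Warm-up calls absorb one-time costs such as caching and lazy setup.
    for _ in range(warmup):
        run_inference()

    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def fake_model():
    """Stand-in workload; replace with your model's forward pass."""
    return sum(i * i for i in range(10_000))


print(f"Median latency: {measure_latency_ms(fake_model):.3f} ms")
```

Running the same script with the same model and batch size on each candidate gives you a like-for-like number to compare against price.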

Once you have the hardware, you’ll have to set it up with the infrastructure you need. But that’s all you need to know from the hardware side.

Let us know if this Hardware Guide was helpful for building your own Deep Learning Server.

We've been providing hardware solutions to AI companies and power users for the past […] your never-ending cloud bills, you know who to get in touch with.
