You’ve probably seen it on a spec sheet or heard it during one of those product launches: “This laptop delivers 40 TOPS of AI performance.”

Sounds impressive, right?

But here’s the truth. Most of those flashy numbers tell you very little about real-world performance. In fact, AI TOPS is more of a marketing gimmick than a benchmark.

Let’s break it down.

What is AI TOPS?

TOPS stands for Tera Operations Per Second. Basically, it’s a way of saying how many simple calculations a chip (like an NPU or GPU) can perform every second. One TOPS means a trillion operations per second, which does sound powerful, but there’s a catch.

TOPS is a theoretical maximum: it assumes every compute unit on the chip is busy on every clock cycle. It’s like saying your car can go 320 km/h if the wind is right and you’re driving downhill. It tells you very little about how your system will perform during an actual AI task.
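To see where a headline figure comes from, here is a minimal sketch of the usual arithmetic, assuming the common convention that one multiply-accumulate (MAC) counts as two operations. The unit count, clock speed, and utilization values are hypothetical, chosen only to land near the 40 TOPS example.

```python
def peak_tops(mac_units: int, clock_ghz: float) -> float:
    """Theoretical peak: every MAC unit busy on every clock cycle, 2 ops per MAC."""
    ops_per_second = 2 * mac_units * clock_ghz * 1e9
    return ops_per_second / 1e12  # 1 TOPS = 10^12 operations per second


def effective_tops(peak: float, utilization: float) -> float:
    """What you get when only a fraction of the units are doing useful work."""
    return peak * utilization


# Hypothetical NPU: 16,384 MAC units clocked at 1.25 GHz (illustrative numbers)
peak = peak_tops(mac_units=16_384, clock_ghz=1.25)
print(f"Spec-sheet peak: {peak:.2f} TOPS")
for util in (0.8, 0.5, 0.2):  # assumed utilization levels, not measurements
    print(f"At {util:.0%} utilization: {effective_tops(peak, util):.2f} TOPS")
```

The peak figure only holds if the chip never stalls waiting on memory or data, which real models rarely allow.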

Worse still, hardware makers rarely tell you what precision was used to get that TOPS number. And that’s where things start getting sneaky.

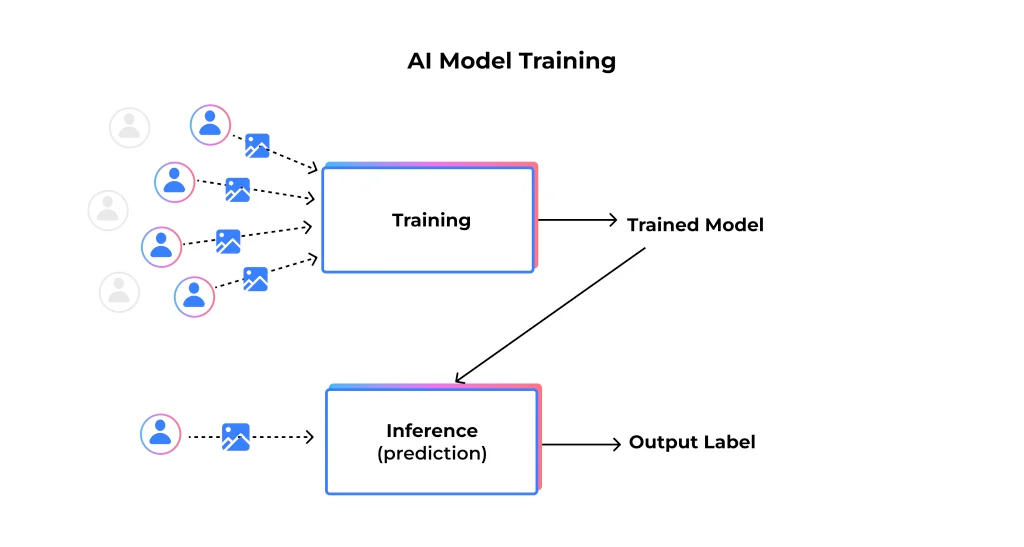

Training vs Inference — Know Your Use Case

Before we talk about hardware, let’s get clear on the kind of AI work you’re doing. There are two main types: training and inference.

Training is when you’re building or fine-tuning a model: feeding it data, letting it make mistakes, and updating its internal weights millions of times over. This is the heavy-duty stuff. You need powerful GPUs, tons of VRAM, and serious cooling. Think FP16 (or BF16) precision, stable math, long runtimes. It’s not something your average laptop is built for.
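The “feed data, make mistakes, update weights” cycle can be shown in miniature. The sketch below is a toy, not how real models are trained: it fits just two weights to noisy data generated from y = 2x + 1 using plain gradient descent, where a real model would have millions or billions of weights and run on GPUs.

```python
import random

random.seed(0)
# Toy dataset drawn from y = 2x + 1 plus a little noise: the pattern the model must learn
data = [(x, 2 * x + 1 + random.gauss(0, 0.1)) for x in [i / 10 for i in range(-20, 21)]]

w, b = 0.0, 0.0  # the model's "internal weights", starting from a bad guess
lr = 0.05        # learning rate: how big each correction step is

for step in range(2000):
    grad_w = grad_b = 0.0
    for x, y in data:
        error = (w * x + b) - y              # how wrong this prediction is
        grad_w += 2 * error * x / len(data)  # gradient of mean squared error w.r.t. w
        grad_b += 2 * error / len(data)      # gradient of mean squared error w.r.t. b
    w -= lr * grad_w                         # nudge the weights to reduce the error
    b -= lr * grad_b

print(f"learned w={w:.2f}, b={b:.2f}")
```

Every one of those 2,000 passes touches every data point, which is why training at real scale needs so much compute.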

Inference, on the other hand, is when the model is already trained and you’re simply using it to get results. You feed it an input and get an output: a translation, a recognized image, a transcript, that sort of thing. This is where smaller, more efficient chips can help, at least in theory. In practice, the fancy NPUs in most “AI laptops” sit largely unused, and in many cases inference still happens in the cloud.
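A widely used back-of-the-envelope rule helps explain the gap between the two: a transformer’s forward pass costs roughly 2 operations per parameter per token, and training adds a backward pass for roughly 6 per parameter per token. The sketch below applies that rule of thumb to a hypothetical 7-billion-parameter model; treat the results as rough estimates, not measurements.

```python
def inference_ops_per_token(params: float) -> float:
    # Forward pass only: about one multiply and one add per weight
    return 2 * params


def training_ops_per_token(params: float) -> float:
    # Forward pass (~2N) plus backward pass (~4N)
    return 6 * params


n_params = 7e9  # hypothetical 7B-parameter model
print(f"Inference: ~{inference_ops_per_token(n_params) / 1e9:.0f} billion ops per token")
print(f"Training:  ~{training_ops_per_token(n_params) / 1e9:.0f} billion ops per token")
```

Training also pays that cost for every token in a dataset that can run to billions of tokens, which is why it lives on big GPUs while inference can fit on smaller chips.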

Precision Matters More Than You Think

This might get a little technical, but AI computations can be done at different precisions, which is basically how much detail each number carries. More precision means better accuracy, but also more memory and slower processing.

- FP16 (16-bit floating point) is the standard for training, with a good balance of range and accuracy.

- FP8 (8-bit floating point) is smaller and faster, with a slight drop in quality.

- INT8 (8-bit integer) is common for inference: fast, light, and surprisingly accurate if calibrated well.

- INT4 and FP4 are extremely compressed, letting huge models run on smaller hardware, but you’ll usually notice some accuracy loss, especially on reasoning or math-heavy tasks.
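To make those trade-offs concrete, here is a minimal sketch that stores values in three of these formats, reads them back, and reports the worst rounding error. It uses Python’s `struct` module (format code `e`, IEEE 754 half precision) for FP16, and a simple symmetric integer scheme for INT8 and INT4, assuming all values lie in [-1, 1]. Real quantizers pick their scales per tensor or per channel from calibration data.

```python
import struct


def fp16_roundtrip(x: float) -> float:
    """Store x as IEEE 754 half precision and read it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def int_roundtrip(x: float, bits: int, max_abs: float = 1.0) -> float:
    """Symmetric linear quantization to a signed integer and back.

    Assumes values lie in [-max_abs, max_abs]; anything outside is clipped.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4
    scale = max_abs / qmax      # size of one integer step
    q = max(-qmax, min(qmax, round(x / scale)))
    return q * scale


samples = [i / 1000 - 1.0 for i in range(2001)]  # evenly spaced values in [-1, 1]


def worst_error(roundtrip) -> float:
    return max(abs(roundtrip(x) - x) for x in samples)


errors = {
    "FP16": worst_error(fp16_roundtrip),
    "INT8": worst_error(lambda x: int_roundtrip(x, 8)),
    "INT4": worst_error(lambda x: int_roundtrip(x, 4)),
}
for name, err in errors.items():
    print(f"{name}: worst-case error {err:.6f}")
```

INT4 has only 15 usable levels in this scheme, so each stored value can be off by up to half a step, far more than INT8’s 255 levels allow.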

Here’s the problem: when companies boast about “40 TOPS of AI power,” they’re usually quoting the lowest precision the chip supports, typically INT8 and sometimes INT4 or FP4. It makes the number look big, but it says nothing about what you’ll actually experience.

So unless your app runs at that exact precision (and most don’t), that TOPS number tells you very little about what you’ll get.
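If you know which precision a headline number was quoted at, you can roughly translate it. The sketch below assumes throughput doubles each time the bit width halves (true of many, but not all, accelerators), treats integer and float formats of the same width as equal, and uses the hypothetical case of a 40 TOPS figure quoted at INT4.

```python
# Assumption: throughput scales inversely with bit width on this chip.
BITS = {"FP16": 16, "FP8": 8, "INT8": 8, "FP4": 4, "INT4": 4}


def convert_tops(tops: float, quoted_at: str, target: str) -> float:
    """Rescale a TOPS figure from the precision it was quoted at to another one."""
    return tops * BITS[quoted_at] / BITS[target]


headline = 40.0  # hypothetical spec-sheet figure, assumed to be quoted at INT4
for fmt in ("INT4", "INT8", "FP16"):
    print(f"{fmt}: ~{convert_tops(headline, 'INT4', fmt):.0f} TOPS")
```

Under that assumption, an app running in FP16 would see about a quarter of the headline figure, before any real-world inefficiency.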

Why the Marketing is Misleading

Let’s be honest, most spec sheets are written to impress, not to inform. AI TOPS numbers are no exception.

You’re often not told what precision the measurement is based on. It could be INT4, INT8, or FP16, and each gives a very different number. You’re also looking at peak performance, not what the system can sustain over time. Some vendors go a step further and add up the CPU, GPU, and NPU figures to get a nice, inflated “system TOPS” number. Sounds great on paper, until you realize that few, if any, of your apps use all those processors at once.
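Here is why a combined figure can mislead, in a few lines of arithmetic. The per-engine numbers are hypothetical, and the sketch assumes all three were quoted at the same precision, which real spec sheets don’t always guarantee.

```python
# Hypothetical peak TOPS per engine on one laptop (illustrative, not a real product)
ENGINES = {"NPU": 40.0, "GPU": 30.0, "CPU": 5.0}


def system_tops(engines: dict[str, float]) -> float:
    """The marketing figure: every engine's peak added together."""
    return sum(engines.values())


def tops_for_app(engines: dict[str, float], used: list[str]) -> float:
    """Peak available to an app that only runs on the engines it actually targets."""
    return sum(engines[name] for name in used)


print(f"System TOPS on the box: {system_tops(ENGINES):.0f}")
print(f"App that only uses the NPU: {tops_for_app(ENGINES, ['NPU']):.0f}")
print(f"App that only uses the GPU: {tops_for_app(ENGINES, ['GPU']):.0f}")
```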

To make matters worse, a lot of AI software doesn’t even tap into those fancy NPUs. Unless you’re running very specific, optimized workloads, that chip may sit idle while most of your processing happens on the CPU, the GPU, or in the cloud.

What Actually Matters for AI Performance

Forget the TOPS. Here’s what you should actually be paying attention to.

First, does the model fit into your VRAM? That’s non-negotiable, especially when you’re working with local LLMs or diffusion models. If your GPU can’t hold the model, it either won’t load or will spill over into much slower system RAM, and performance falls off a cliff.
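A quick way to check fit is parameters times bytes per parameter. This minimal sketch counts weights only, plus an assumed 20% headroom for the KV cache, activations, and runtime buffers; real overhead depends on context length and the inference engine, so treat it as a first filter rather than a guarantee.

```python
BYTES_PER_PARAM = {"FP16": 2.0, "INT8": 1.0, "INT4": 0.5}


def weights_gb(params_billion: float, precision: str) -> float:
    # 1e9 params x bytes per param / 1e9 bytes per GB = GB directly
    return params_billion * BYTES_PER_PARAM[precision]


def fits_in_vram(params_billion: float, precision: str, vram_gb: float,
                 overhead: float = 1.2) -> bool:
    # overhead=1.2 is an assumed 20% headroom, not a measured figure
    return weights_gb(params_billion, precision) * overhead <= vram_gb


# Hypothetical 13B model on a 16 GB card
for precision in ("FP16", "INT8", "INT4"):
    gb = weights_gb(13, precision)
    verdict = "fits" if fits_in_vram(13, precision, 16) else "does not fit"
    print(f"{precision}: {gb:.1f} GB of weights, {verdict} in 16 GB")
```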

Next, look at throughput. How many tokens per second or images per second is your system actually processing? That’s a real metric you can feel.
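Measuring throughput is mostly a matter of counting what comes out of the model and dividing by elapsed time. In this sketch, `fake_generate` is a hypothetical stand-in that sleeps to simulate work; in practice you’d swap in whatever streaming call your local runtime provides.

```python
import time
from typing import Callable, Iterator


def tokens_per_second(generate: Callable[[], Iterator[str]]) -> float:
    """Consume a token stream and return tokens produced per wall-clock second."""
    start = time.perf_counter()
    count = sum(1 for _ in generate())
    elapsed = time.perf_counter() - start
    return count / elapsed


def fake_generate(n_tokens: int = 20, seconds_per_token: float = 0.01) -> Iterator[str]:
    # Hypothetical stand-in for a real model: ~10 ms of "work" per token
    for i in range(n_tokens):
        time.sleep(seconds_per_token)
        yield f"token{i}"


rate = tokens_per_second(fake_generate)
print(f"{rate:.1f} tokens/s")
```

Run it several times, on prompts like the ones you’ll really use, since a first run can include one-off warm-up costs.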

Latency matters too: how long you wait before the response starts to appear. It’s not just about how fast your machine can be; it’s about how responsive it feels when you’re actually using it.
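For chat-style use, a common latency measure is time to first token, and the slow outliers matter as much as the typical case. This sketch times repeated runs of a hypothetical stand-in generator and reports the median and the 95th percentile using Python’s `statistics` module.

```python
import statistics
import time
from typing import Callable, Iterator


def time_to_first_token(generate: Callable[[], Iterator[str]]) -> float:
    """Seconds from starting a request until the first token arrives."""
    start = time.perf_counter()
    next(generate())  # stop as soon as the first token shows up
    return time.perf_counter() - start


def fake_generate() -> Iterator[str]:
    # Hypothetical stand-in: ~50 ms of prompt processing, then tokens stream out
    time.sleep(0.05)
    yield "Hello"
    yield "world"


samples = [time_to_first_token(fake_generate) for _ in range(20)]
median = statistics.median(samples)
p95 = statistics.quantiles(samples, n=20)[-1]  # last of 19 cut points = 95th percentile
print(f"median: {median * 1000:.0f} ms, p95: {p95 * 1000:.0f} ms")
```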

Finally, try the same model at different precisions (FP16, INT8, and so on) and see how each affects both speed and output quality. That’ll tell you more than any marketing slide ever will.
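The quality half of that experiment can be sketched with a toy: quantize the weights of a tiny stand-in “model” (a single dot product with random weights) to INT8 and INT4, and compare its outputs against the unquantized ones. Everything here is illustrative; with a real model you would compare actual outputs or benchmark scores, and measure speed in your real runtime, since pure Python won’t show quantization speedups.

```python
import random


def quantize(weights: list[float], bits: int) -> list[float]:
    """Symmetric per-tensor quantization, scaled so the largest weight maps to qmax."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / qmax
    return [round(w / scale) * scale for w in weights]


def tiny_model(weights: list[float], inputs: list[list[float]]) -> list[float]:
    # Stand-in "model": one dot product per input vector
    return [sum(w * x for w, x in zip(weights, row)) for row in inputs]


random.seed(42)
weights = [random.gauss(0, 1) for _ in range(256)]
inputs = [[random.gauss(0, 1) for _ in range(256)] for _ in range(100)]
reference = tiny_model(weights, inputs)

errors = {}
for bits in (8, 4):
    output = tiny_model(quantize(weights, bits), inputs)
    # Total absolute deviation from the reference, relative to the reference's size
    errors[bits] = (sum(abs(o - r) for o, r in zip(output, reference))
                    / sum(abs(r) for r in reference))
    print(f"INT{bits}: {errors[bits]:.2%} relative error vs unquantized weights")
```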

So What Kind of PC Do You Actually Need?

That depends entirely on your use case. If you’re just running a small local chatbot or playing around with transcription models, you’ll do fine with a mid-range GPU (think 12 to 16 GB of VRAM), a Ryzen 7 or Core i7, and about 32 GB of RAM.

Want to run something like GPT-OSS with 20 billion parameters? You’re going to need at least 16 GB of VRAM, ideally on a 16 GB card such as an RTX 4070 Ti Super or RTX 4080, and 64 GB of system RAM. Your CPU should be able to keep up too; Ryzen 9 or Core i9 is where it gets comfortable.

And if you’re eyeing the big stuff like 70B+ models, full-precision inference, or even training, you’re moving into workstation or multi-GPU territory. You’ll want 48 GB of VRAM or more, possibly split across two or more cards, and at least 128 GB of RAM. Full precision is data-center territory: at FP16, a 70B model’s weights alone take about 140 GB (70 billion × 2 bytes). But with smart quantization (dropping to INT8 or INT4), you can get real results on high-end consumer hardware; at INT4, those same weights shrink to about 35 GB.

Still Confused?

We’ve been in the business for a little over a decade and took the AI craze head-on before most people had even heard of chatbots. Take this PC we built specifically for AI all the way back in 2018; yes, that was 7 years ago! So if you need hardware to power your AI-based solution, you know who to get in touch with.