Generative AI and Transformer Neural Networks stand at the cusp of the New AI Era.

Unlike recurrent neural networks (RNNs), which process a sequence one step at a time, Transformers process every position of a sequence in parallel. That calls for hardware designed around the Transformer architecture, so that training gets faster without giving up accuracy.
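To make the sequential-versus-parallel point concrete, here is a minimal sketch in plain Python. It uses toy scalar models with made-up weights (`w_x`, `w_h` and the function names are assumptions for illustration, not a real network): the RNN loop needs the previous hidden state at every step, while each attention output depends only on the inputs and could be computed on a separate core.

```python
import math

def rnn_forward(xs, w_x=0.5, w_h=0.5):
    """Toy scalar RNN: every hidden state needs the previous one,
    so the loop below cannot be split across workers."""
    h = 0.0
    hidden_states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)  # depends on the previous h
        hidden_states.append(h)
    return hidden_states

def attention_position(i, xs):
    """Toy scalar self-attention output for position i.
    It reads only the inputs, never another position's output."""
    scores = [xs[i] * x for x in xs]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return sum(w * x for w, x in zip(weights, xs)) / total

def attention_forward(xs):
    # Each position is independent, so this list could be built in any
    # order, or handed to parallel hardware in one go.
    return [attention_position(i, xs) for i in range(len(xs))]

if __name__ == "__main__":
    sequence = [0.1, 0.4, -0.2, 0.3]
    print(rnn_forward(sequence))
    print(attention_forward(sequence))
```

Real Transformers work on matrices rather than scalars, but the dependency structure is the same, and that independence is what GPUs exploit.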

Enter the Nvidia L40S GPU server, with a dedicated Transformer Engine.

We configured this server for one of our clients to speed up training of their ML model, which delivers real-time analysis of satellite imagery for industries such as climate, agriculture and infrastructure, with the goal of solving global sustainability challenges.

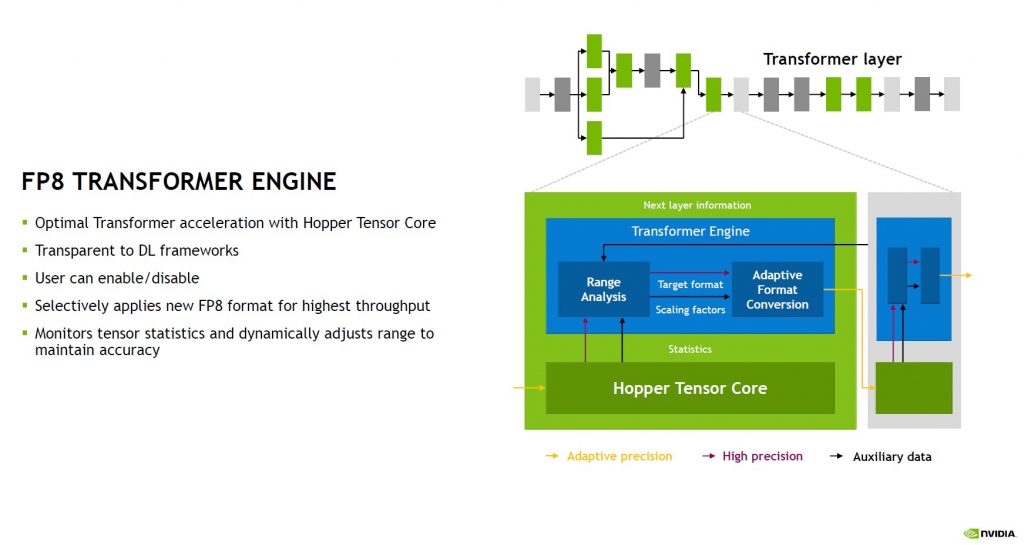

What is a Transformer Engine?

The Transformer Engine combines Tensor Core hardware that supports FP8 with software that accelerates Transformer models on the GPU. It reduces memory use for both training and inference. It also analyses the layers of your Transformer network and automatically chooses between FP8 and FP16 precision for each one, delivering faster training and inference for Generative AI models.
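The idea of fitting values into FP8 can be sketched in a few lines of plain Python. This is a simplified illustration, not Nvidia's implementation: the two maxima are the largest finite values of the E4M3 and E5M2 FP8 formats, while `choose_precision` and its `max_dynamic_range` threshold are a hypothetical rule invented here to show what a per-layer decision might look like.

```python
# Largest finite values of the two FP8 formats.
FP8_E4M3_MAX = 448.0
FP8_E5M2_MAX = 57344.0

def scaling_factor(values, fp8_max=FP8_E4M3_MAX):
    """Per-tensor scale that maps the largest magnitude onto the FP8 maximum."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return 1.0
    return fp8_max / amax

def scale_tensor(values, fp8_max=FP8_E4M3_MAX):
    """Scale the values and clamp them to the FP8 range (no rounding is simulated)."""
    scale = scaling_factor(values, fp8_max)
    return [max(-fp8_max, min(fp8_max, v * scale)) for v in values]

def choose_precision(values, max_dynamic_range=1.0e4):
    """Hypothetical rule: keep FP8 only when the nonzero magnitudes
    span a narrow range, otherwise fall back to FP16."""
    magnitudes = [abs(v) for v in values if v != 0.0]
    if not magnitudes:
        return "fp8"
    ratio = max(magnitudes) / min(magnitudes)
    return "fp8" if ratio <= max_dynamic_range else "fp16"

if __name__ == "__main__":
    layer_outputs = [1.0, -2.0, 0.5]
    print(scaling_factor(layer_outputs))
    print(scale_tensor(layer_outputs))
    print(choose_precision(layer_outputs))
```

The takeaway is that lower precision only works when the values are rescaled to use the narrow FP8 range well, which is the bookkeeping the Transformer Engine takes off your hands.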

Hardware behind AI

In the 2023 AI gold rush, GPUs have become the shovels. Even buying a consumer GPU has become difficult and expensive compared with the previous three generations of Nvidia launches.

For building AI models with faster training times, the standard approach is to rent compute from a cloud provider such as AWS, which gives you a slice of high-performance, AI-specific hardware like Nvidia A100 or H100 servers. Buying that hardware yourself is almost impossible, since it means purchasing an entire server with not one or two but a total of eight GPUs interconnected via NVLink.

This demands a hefty upfront investment. And to top it off, these GPUs are so application-specific that you can’t even get a display output from those “graphics” cards.

On the flip side, cloud solutions like AWS offer a low entry cost, but the monthly bills quickly stack up, making them really expensive in the long run.
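A quick way to see where the monthly bills overtake an upfront purchase is a break-even calculation. The sketch below is a rough model only: the function name and every figure in the example are hypothetical placeholders, not real prices, so plug in your own quotes.

```python
import math

def break_even_months(hardware_cost, monthly_running_cost, monthly_cloud_cost):
    """First month at which cumulative cloud spend reaches or exceeds the
    cumulative cost of owning (purchase price plus running costs).
    Returns None when the cloud is not more expensive per month."""
    monthly_saving = monthly_cloud_cost - monthly_running_cost
    if monthly_saving <= 0:
        return None
    return math.ceil(hardware_cost / monthly_saving)

if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    months = break_even_months(
        hardware_cost=12_000,        # one-off server purchase
        monthly_running_cost=200,    # power, hosting, maintenance
        monthly_cloud_cost=1_200,    # equivalent rented GPU time
    )
    print(months)
```

With these placeholder figures the purchase pays for itself after 12 months. The model ignores depreciation, utilisation and staff time, so treat it as a starting point.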

Finally, you have consumer-grade cards like the RTX 4090 or its workstation counterpart, the RTX 6000 Ada, neither of which is built for sustained data-center AI workloads the way the L40S is.

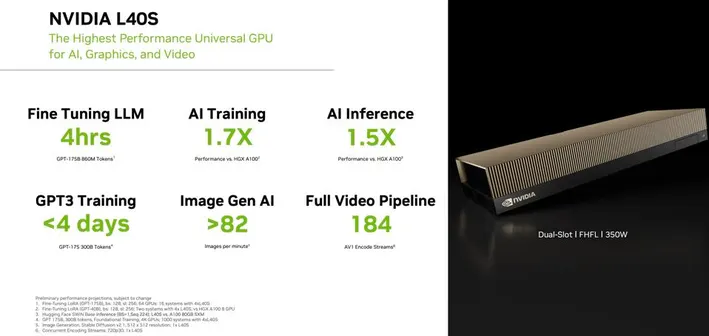

However, the Nvidia L40S sits right in between, giving you the flexibility to scale as you go. It’s a regular PCIe GPU that fits in most modern servers.

Benefits of Nvidia L40S

Not only is it cost-effective, since you can buy one card and add more as you go, but it is also general-purpose.

The L40S can also be used for running molecular dynamics simulations, rendering richly textured ray-traced environments, and handling other workloads that process massive amounts of data (like running Cyberpunk 2077 in Path Tracing mode 🧐🧐).

In fact, it also has four display outputs with support for 5K at 60 Hz. It’s a GPU that can slot into your IT setup as simply as a hot-swap, but with all the power that tech-enabled businesses require.

OK, but surely it’s not as good as the AWS server GPUs, right? Not quite. The Nvidia L40S is based on the Ada Lovelace architecture, and its fourth-generation Tensor Cores support FP8, which the older A100 does not. The H100 is still the faster chip for large-scale training, but for many Generative AI workloads the L40S holds its own against the A100 at a lower cost of entry.

Simply put, the Nvidia L40S is one of the best solutions available for MSMEs looking to get started with Generative AI, thanks to its easy accessibility, strong performance and low cost of entry.

If you’re looking for a similar solution, get in touch with our subject matter experts, and we’ll help you build a robust, cost-effective server tailored to your needs. Your journey into the world of Generative AI is just a call away.
