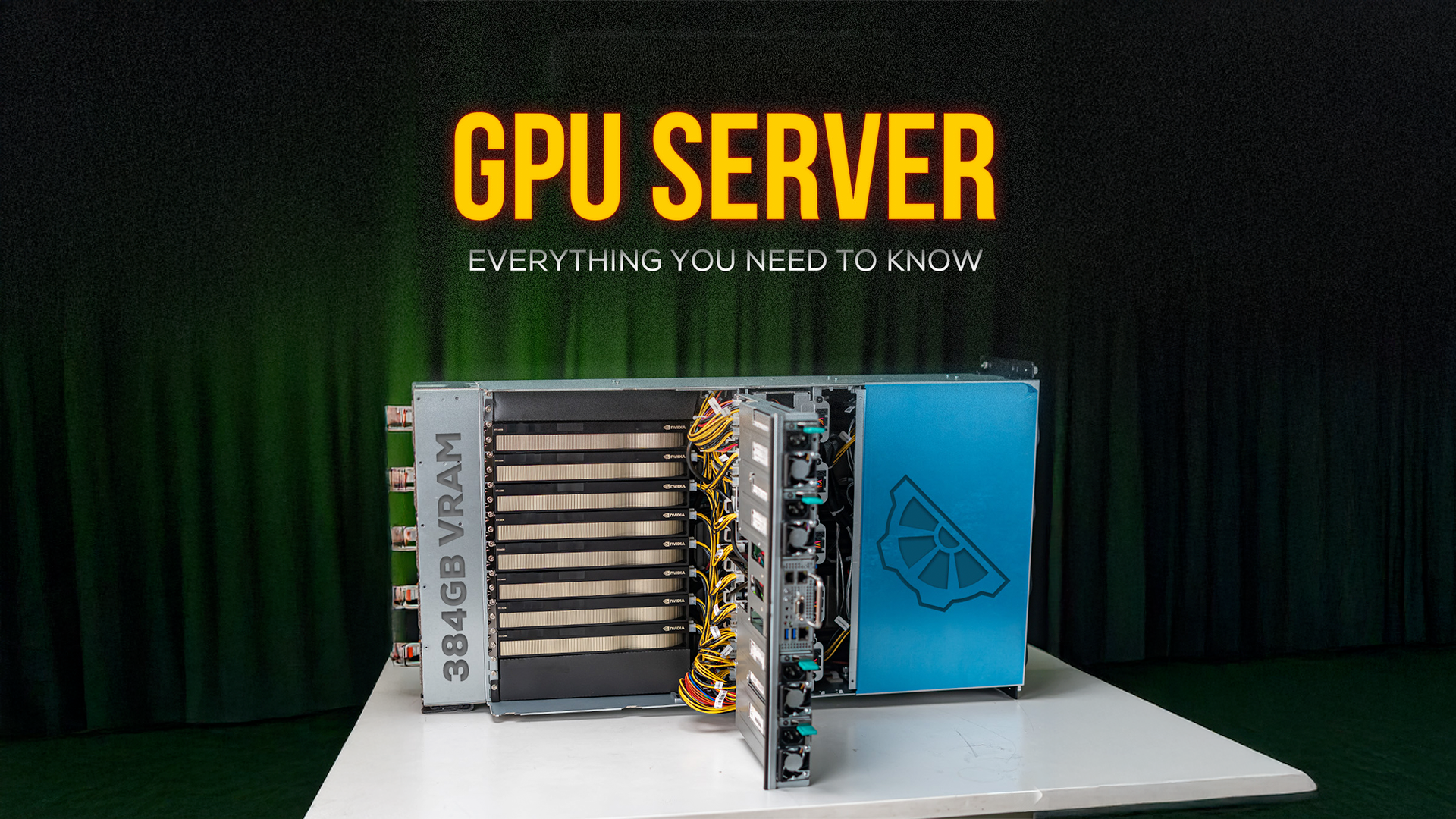

This is a GPU server with eight RTX A6000 GPUs. Together, they provide 384 GB of VRAM and over 86,000 CUDA cores. This class of hardware is what powers deep learning and generative AI. The AI tools people use every day, be it image generation systems, large language models, and recommendation engines which are run inside data centers filled with thousands of GPU servers like this one. What you are seeing here is a single building block of those systems.

Why This Exists

Before discussing the hardware required to build a server like this, the reason such a system exists needs to be clear.They are built because the economics and the risk profile of the cloud change completely once workloads become serious and continuous.

Reason One: Cost Becomes the Main Problem

Anyone who has used AI or machine learning workloads on cloud platforms for long periods knows how quickly the bills grow. In many real-world cases, cloud compute can be up to ten times more expensive than owning equivalent hardware, even when only subscription fees over a single year are considered. Extend that usage over four years and the cost difference can grow to several dozen times the price of buying hardware outright.

Owning hardware changes the cost structure completely. Instead of recurring monthly bills that keep rising, the expense becomes a one-time capital investment. Monthly operating costs drop sharply, limited mostly to power, cooling, and basic maintenance. For long-term workloads, this shift alone can justify building a dedicated server.

Reason Two: Ownership and Control Matter

When cloud services are used, all data is uploaded to third-party servers. This includes training datasets, proprietary code, and the complete architecture of AI models. There is no real visibility into how that data is stored, analyzed, or potentially reused. If someone is building a valuable AI system, there is always the risk that the cloud provider benefits from that work before the creator does.

A dedicated server is a private asset. No external party has access to the data unless explicitly allowed. For companies, this hardware is also a balance-sheet asset that can be depreciated over time, improving financial efficiency while maintaining full data control and peace of mind.

The Two Questions That Decide the Build

Choosing the right hardware starts with answering two questions: how much compute performance is required, and how much memory is required. Compute is primarily determined by the GPU. For beginners, cloud free trials are still the fastest way to experiment and learn. For serious AI developers training models regularly, the key metric becomes training throughput. The best approach is to select the GPU that delivers the highest training performance within budget constraints.

GPU Choice First, Then GPU Count

Modern NVIDIA GPUs, especially from the Ada and data center series, are common choices. In this configuration, each RTX A6000 provides 48 GB of VRAM. Once the GPU model is selected, the next decision is quantity, how many GPUs are needed to meet training or inference goals.

The Platform Has to Match the GPUs

After GPUs, the platform must be chosen. This includes deciding between a tower or rack-mounted chassis, selecting the appropriate motherboard and CPU, determining network bandwidth requirements, and deciding whether ECC memory is necessary. These factors depend on workload type, scale, and reliability needs. A proper configuration team can translate workload requirements into a precise hardware specification.

Inference Has Different Priorities Than Training

Inference-focused workloads follow a similar logic but with different priorities. Instead of raw training throughput, the focus shifts to inference speed and latency. Model size, parameter count, and dataset type matter here. Image and video models generally require significantly more VRAM than text-based models. GPUs such as NVIDIA H100, A100, and L40S are commonly used for high-performance inference scenarios.

The Build Logic Is Simple

The process is straightforward: identify the workload, select the GPU that best fits that workload, build the system around it, and then deploy the required infrastructure. With the right planning, a dedicated deep learning server becomes a long-term advantage rather than a recurring expense.

This hardware guide exists to make that process clear. We have been building enterprise-grade hardware solutions for AI companies and power users for a decade. For anyone looking to escape endless cloud bills and take full control of their AI infrastructure, this is the alternative.

Leave a Reply